Research IT

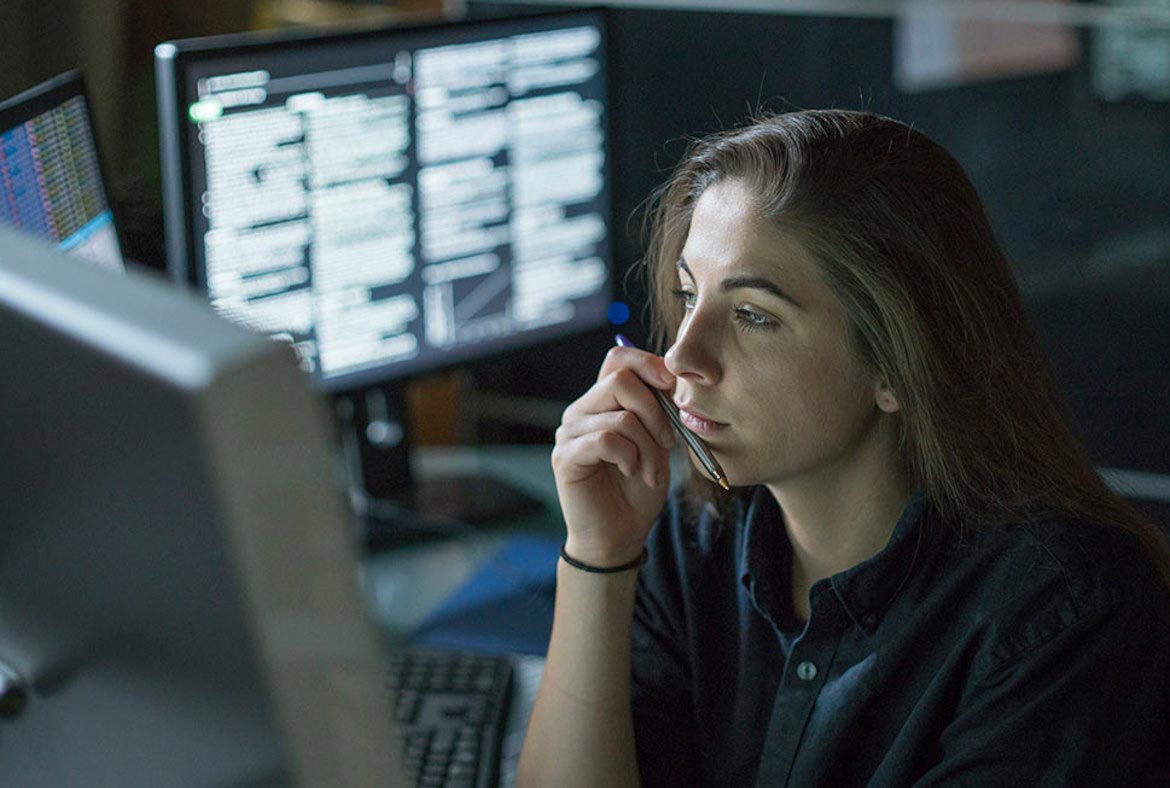

Boosting research quality, output, and impact through swift delivery of technical expertise, advanced computing platforms, consultancy on software development, and workflow optimisation.

Better research, made easy

Whether you are a new starter or an expert, take advantage of Research IT's dedicated teams to boost research quality and productivity. Access the best technical expertise or gain an edge through topic-specific networks. Build quality research software with professional software engineers. Employ leading applications and the best platforms for routine or heavyweight research problems. Gain technical proficiencies for roles across academia and industry, or consult us to improve grant proposals, research workflows, and recruitment. Get started today.